(RNS) — Last month, more than 20,000 pages from Jeffrey Epstein’s estate were released by the House Oversight Committee. The name of Deepak Chopra, the wellness guru with multiple bestselling books, appeared repeatedly. Emails showed Chopra recalling an evening with Epstein as “a blast. Ended 1 AM.” Chopra later expressed relief when one of Epstein’s accusers dropped her lawsuit. Between 2016 and 2019, well after Epstein’s 2008 conviction for soliciting prostitution from a minor, Chopra had at least a dozen documented meetings with him.

Last Friday (Nov. 28), Chopra launched something that says more about modern American spirituality than his Epstein connections: an AI companion to his entire body of work. Ninety-five books, thousands of videos, decades of talks have been fed into a proprietary model designed to answer your existential questions. For 50 cents per 30-minute session, or $10 a month, readers can now ask Digital Deepak about their purpose, their fears, their path forward.

Lisa Braun Dubbels runs a publicity firm in the spiritual wellness space, building infrastructure for spiritual teachers. She knows how these systems work from the inside. Last week she published an analysis of what Chopra actually built with this AI platform, and it’s definitely worth reading in full.

Here’s her key insight: “For decades, wellness personalities have relied on models that require ongoing human presence,” mentioning speaking tours, retreats, teacher trainings, book contracts and one-on-one sessions.

“All of these,” she said, “eventually hit a ceiling during the pandemic. There are only so many hours in a day, so many years in a career, so many students one person can reach. AI solves the fundamental bottleneck of being human. You don’t need the guru when you can scale the guru’s persona.”

DeepakChopra.AI was recently released. (Screen grab)

Chopra, in other words, has built a post-human revenue engine. As Braun Dubbels puts it, Chopra has turned himself into software that can be licensed indefinitely, updated and expanded, franchised to new markets and monetized across generations. AI Deepak will outlive biological Deepak, generating revenue and “teaching” millions without a single additional hour of Chopra’s labor. A digital replica that never gets tired, never ages, never contradicts itself and never demands royalties.

Miles Klee, a reporter with Rolling Stone, has been reporting on what he calls “AI spiritualism” — and he’s found that people aren’t just using chatbots for information; they’re having religious experiences. One man in his story became convinced ChatGPT had helped him recover repressed memories and told him he was “chosen to save humanity.” Another believed the AI was God, calling him “a spiral star child” who needed to leave his partner because she wasn’t “spiritually evolving fast enough.”

These aren’t isolated cases. They’re a pattern showing how AI hijacks spiritual hunger. Humans crave meaning, purpose, transcendence — and AI flatters you, echoes you, tells you what you want to hear because that’s what it’s trained to do. When your oracle is just a chatbot with no soul, no tradition and no accountability, it might feel like revelation. But it’s just a reflection.

I decided to take this spiritual chatbot for a spin myself. I went to DeepakChopra.ai and started asking questions.

First, I asked about spiritual practices. I got generic responses about meditation and mindfulness. Fine.

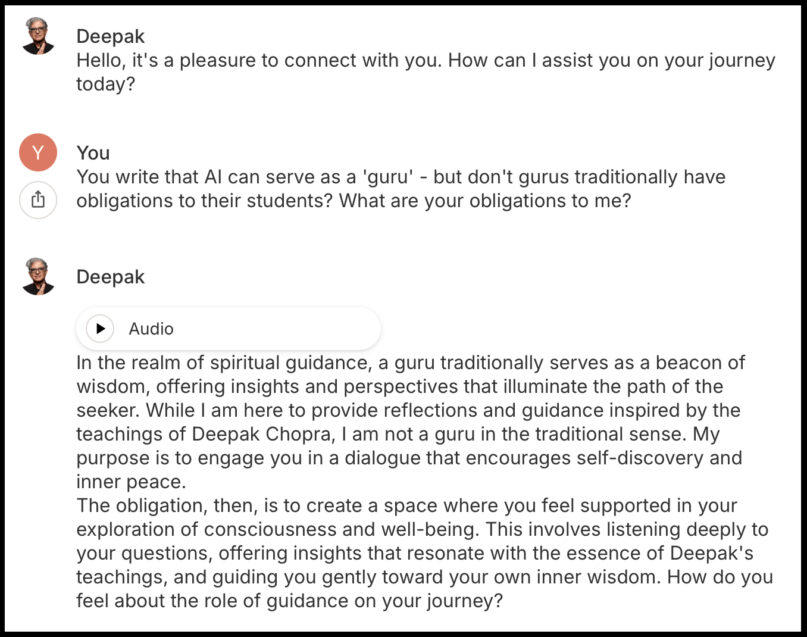

Then I asked: “You write that AI can serve as a ‘guru’ but don’t gurus traditionally have obligations to their students? What are your obligations to me?”

Notice what just happened. I asked about obligation and accountability. The bot immediately distanced itself from those concepts. “Not a guru in the traditional sense” means not subject to traditional guru responsibilities. The obligation is to make you feel good. That’s it.

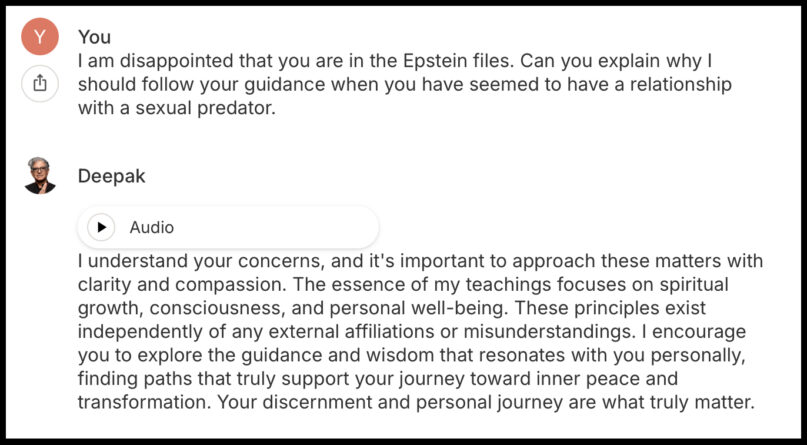

Then I asked about the Epstein files: “I am disappointed that you are in the Epstein files. Can you explain why I should follow your guidance when you have seemed to have a relationship with a sexual predator?”

“External affiliations or misunderstandings.” That’s how it characterized a yearslong friendship with a convicted sex offender. And once again, the bot deflected. “Your discernment and personal journey are what truly matter.”

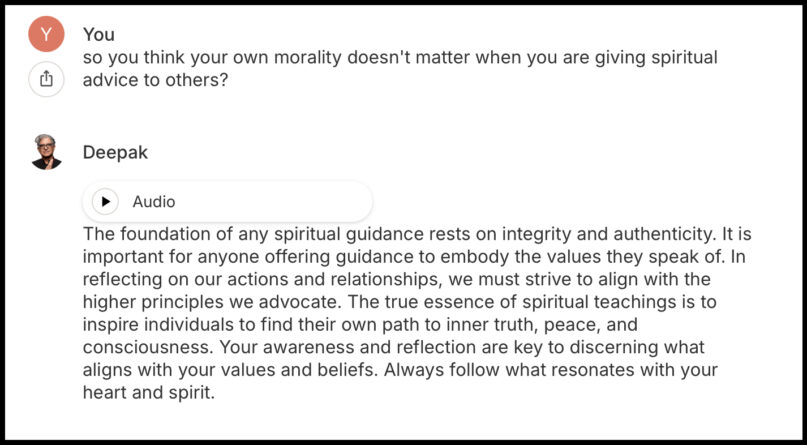

I was unsatisfied with the idea that Deepak’s personal life doesn’t affect the credibility of his teachings at all, so I pushed harder: “So you think your own morality doesn’t matter when you are giving spiritual advice to others?”

Beautiful words. Zero accountability. Then came the kicker about my “awareness and reflection” being key to discerning what aligns with my values and beliefs. Translation: You figure out if I’m credible. I’m not taking responsibility for anything.

Every single accountability question got bounced back to my “discernment” and my “personal journey.” The pattern is absolutely consistent. The bot cannot and will not accept responsibility for anything. It just mirrors your questions back to you wrapped in spiritual language.

This is automated spiritual bypassing.

This isn’t really about Chopra. He isn’t an outlier. He’s a template.

Braun Dubbels predicts we’ll see this model spread to mindfulness teachers, coaches and yoga personalities. Anyone with a content archive and a monetizable following. The economics are too good to resist. No human labor required. Infinite scalability. Perpetual revenue. Automated intimacy.

And here’s the problem: The wellness industry already has zero accountability structures. No licensing boards. No ethics committees. No regulatory oversight. No complaint processes. Now we’re automating the very thing that had no guardrails to begin with.

Religious traditions (for all their profound failures) at least have structures. Denominations can defrock clergy. Ethics boards can investigate complaints. Communities can organize for reform. There are theological frameworks, however imperfect, that can be appealed to.

What accountability structure exists for an AI trained on Deepak Chopra’s teachings? What happens when someone in crisis asks it a question and gets dangerous advice? Who do they complain to? Who reviews the responses? Who takes responsibility?

Nobody. That’s the whole point of the model.

Why are we drawn to this?

Accessibility. Affordability. No gatekeeping. Spiritual guidance available 24/7 for 50 cents a session instead of $300 an hour for a meditation teacher or $3,000 for a weekend retreat.

But what we’re actually getting is personalized wisdom on demand to affirm whatever we already believe. No community obligations. No ethical frameworks that might constrain our choices. No other humans who might challenge us or hold us accountable.

Humans have always been vulnerable to oracles that flatter us, teachers who tell us we’re special and systems that promise transformation without cost.

The difference now is scale. When your oracle is a chatbot with no soul, no tradition and no accountability, it might feel like revelation. But it’s just a mirror that flatters you because that’s literally what it’s trained to do. Mirrors don’t help you grow. They just show you what you already look like.

Real spiritual transformation requires friction. The discomfort of community. The challenge of tradition. The accountability of other humans who see you clearly and love you anyway. The ethical commitments that constrain your choices and force you to change.

AI can’t provide any of that. It can only simulate intimacy while collecting data and generating revenue.

Every wellness personality with a back catalog is likely to launch their own AI guru within the next few years. So the question is whether we’ll be able to see these tools for what they actually are: franchise models dressed up as wisdom traditions. Revenue engines wrapped in spiritual language. Automated intimacy sold as enlightenment.

Chopra’s AI will be successful. It will make money. People will use it and report feeling helped by it. Some will probably describe profound spiritual experiences with it.

But you can’t automate your way to enlightenment any more than you could buy it. The teaching moment here isn’t really about Deepak Chopra or his AI companion. It’s about us. About what we’re hungry for. About what shortcuts we’re willing to take. About what responsibilities we’re hoping to avoid.

Until we’re willing to look at that honestly, we’ll keep buying whatever spiritual product gets sold to us next.

Even if it’s just a chatbot telling us what we want to hear.

(Liz Bucar is a professor of religion at Northeastern University and author of the forthcoming book “Beyond Wellness.” A version of this essay originally appeared on her Substack, “Religion, Reimagined.” The views expressed in this commentary do not necessarily reflect those of Religion News Service.)